Cubitts, a glasses retailer, announced that they’re going to use the iPhone’s camera to scan faces to help customers buy glasses. I’m interested in the privacy implications of this feature.

At first glance it seems like a cool idea. But I’m deeply skeptical of any implementation that requires such personal information for such a trivial use case.

I wanted to look into the privacy implications and whether I could justify the usefulness of the feature.

Note that this isn’t a criticism of Cubitts. I don’t disagree with the feature in general, but I’m skeptical of the execution, which makes me reluctant to use it.

- What the privacy policy says

- Security

- Machine learning

- Influencing future design

- Other missing pieces

- So what?

- What to do about it?

- e: Potential for loss

What the privacy policy says

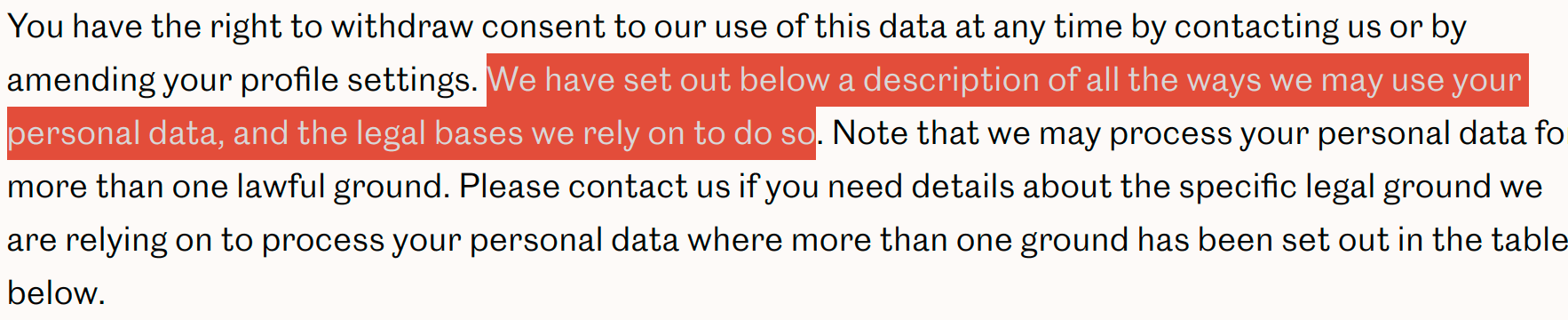

Firstly, their privacy policy mentions “biometric” eight times, which is a good start. At the very least they’re conscious that this is a big privacy issue.

OK, so they explicitly say what they collect. That’s good. But it’s all of the data, which is not so good.

They use the TrueDepth camera. That makes sense.

🚩 The data is transmitted to their servers, “so that we can check that the product will be a comfortable fit.” I was hoping they would do more of the computation locally rather than transmitting the biometric information. That is, instead of sending the facial dimensions, they could have downloaded the dimensions of supported frames and then queried them locally.
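To make the alternative concrete, here is a minimal Python sketch of on-device matching. The `Frame` and `Face` types, the measurements chosen and the fit tolerances are all hypothetical (I don’t know what Cubitts actually measures); the point is only that the catalogue comes down to the phone and the face data never goes up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    """A frame from a hypothetical downloadable catalogue (no user data in it)."""
    name: str
    lens_width_mm: float
    bridge_width_mm: float


@dataclass(frozen=True)
class Face:
    """Hypothetical measurements derived on the phone; they never leave it."""
    face_width_mm: float
    nose_bridge_mm: float


def front_width(frame: Frame) -> float:
    # Rough total width of the frame front: two lenses plus the bridge.
    return 2 * frame.lens_width_mm + frame.bridge_width_mm


def fits(frame: Frame, face: Face,
         width_tol_mm: float = 6.0, bridge_tol_mm: float = 2.0) -> bool:
    # Made-up fit rule: front width and bridge must be close to the face's.
    return (abs(front_width(frame) - face.face_width_mm) <= width_tol_mm
            and abs(frame.bridge_width_mm - face.nose_bridge_mm) <= bridge_tol_mm)


def suitable_frames(catalogue: list[Frame], face: Face) -> list[str]:
    # All matching happens on the device; only the catalogue was downloaded.
    return [frame.name for frame in catalogue if fits(frame, face)]


# Usage: the catalogue is fetched from the server, the face is measured locally.
catalogue = [Frame("A", 50, 20), Frame("B", 55, 18), Frame("C", 45, 22)]
face = Face(face_width_mm=120, nose_bridge_mm=19.5)
print(suitable_frames(catalogue, face))  # ['A']
```

The server only ever learns which catalogue version was downloaded, not who looked at which frames or what their face is like.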

You can’t use the feature unless you register. That seems strange. Perhaps that’s a legal requirement.

It’s good that they explicitly mention that the data is not used for identification purposes, because that means they’re at least somewhat aware of the regulations. In fact, in the EU (and, I suppose, in the UK, at least for the time being) using biometric data for identification is illegal unless overwhelmingly required. For instance, it can’t even be used for employee clock-in, because there are other equally convenient ways to do that.

They explain how they use data.

🚩 Obviously a hasty addition, given that the font doesn’t match.

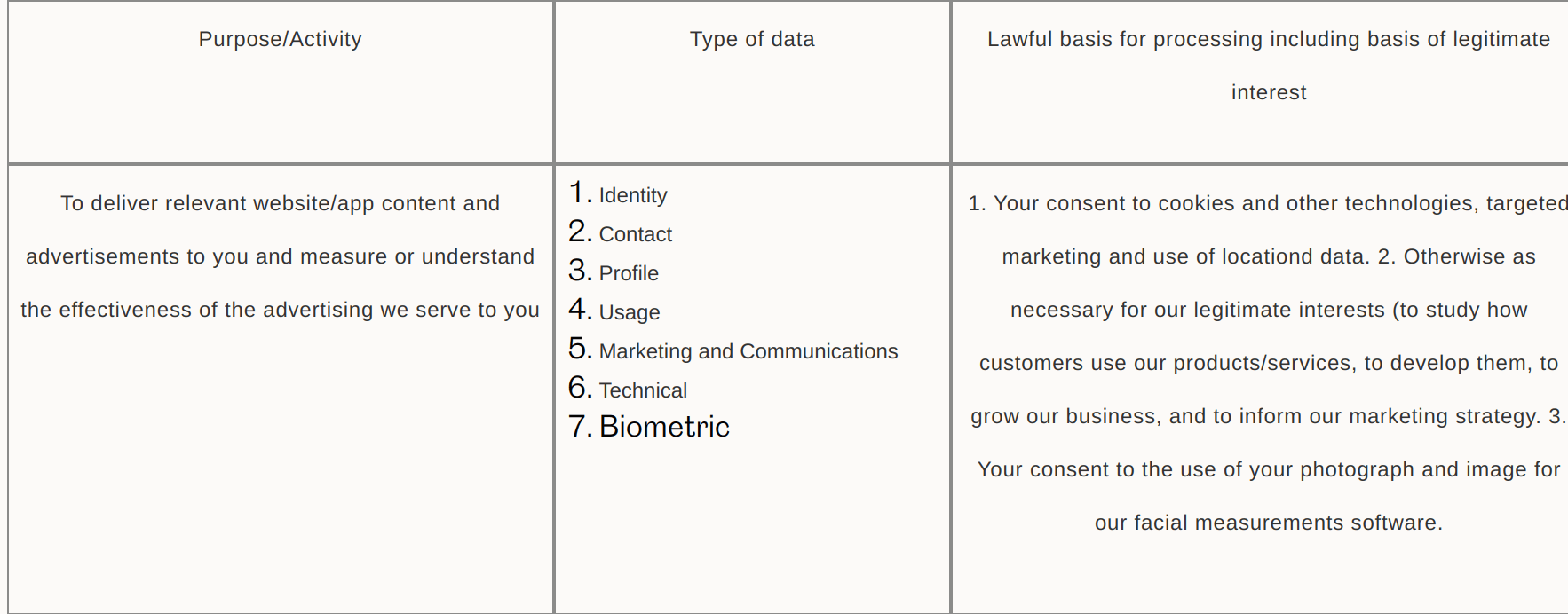

🚩 They’re going to use the facial measurements to serve ads and “measure effectiveness”.

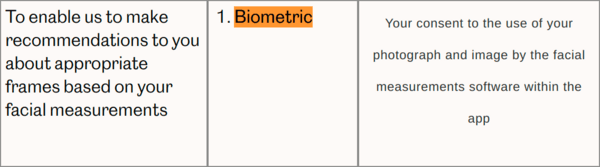

This could be considered a supplement to the core functionality: they could suggest frames that fit your facial features. That’s not unreasonable. What is less reasonable is that the recommendations are generated server-side.

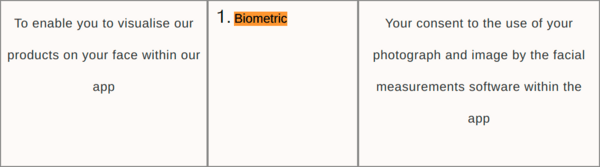

This is the core functionality, I suppose.

🚩 This section is a bit ambiguous and slightly concerning because they mention health and biometric data separately. They go on to explicitly say that you can revoke consent to the use of health data, but it is not clear whether that applies to biometric data as well. It might just be poor wording.

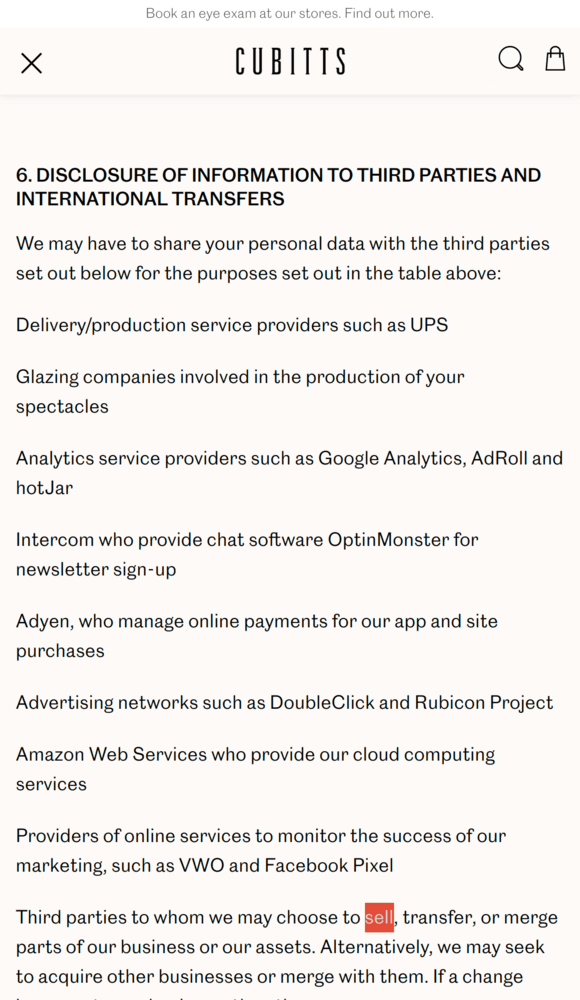

🚩 They don’t rule out selling your data, and they give you no way to opt out of it.

Security

I’m not part of the company, so in this section I make assumptions that could very well be wrong.

Firstly, the security section in the privacy policy is entirely generic and that’s not very reassuring.

Secondly, I noticed that the site runs on Sylius. This is both good and bad.

It’s good because it means it’s a product rather than a half-baked homegrown solution, so it’s unlikely to have obvious security vulnerabilities that script kiddies could exploit.

However, it might be bad because it might be run in-house. That means there could be exploitable issues with the infrastructure setup, such as unsecured storage buckets or a public-facing MySQL database.

Running on an existing platform also means that Cubitts is probably more of a glasses retailer than a tech company. This makes me wary of giving too much of my personal data.

Machine learning

The Dezeen article about the app includes this passage:

> However, in time, the app could use its machine-learning capabilities to analyse purchases made by users. This would allow it to better predict which frame a user is more likely to choose in terms of style.
>
> “We will get to that, but it will take time because we have to train the machine learning algorithm. The more people use it, the more powerful it gets.”

🚩 There is no mention of this whatsoever in the privacy policy, nor any way to opt out.

🚩 So, even if your facial measurements themselves aren’t sold, a model trained on them might exist and might be sold.

This is similar to the issue of GitHub Copilot learning from MIT-licensed code, but with your face.

Influencing future design

Another thing the article mentions that the privacy policy doesn’t is that the company plans to influence the design of new collections:

> Cubitts could potentially use data gathered by the app to influence the design of new collections, creating frames that cater to as wide a customer base as possible.

🚩 Again, there is no mention of this in the privacy policy, and no way to opt out.

Other missing pieces

🚩 There is no clear explanation of the format in which the data will be stored. Is it possible to reconstruct your facial features from the information stored?

🚩 There is also no explanation of the controls over who can access your data. Is it in the main database that anyone at the company can access? Is it encrypted so that only some team members can access it? Is it in an entirely separate database?

So what?

Well, there are two issues: the understanding of the average user and the incomplete information provided.

The average user

The average user rarely (if ever) checks privacy policies and probably doesn’t fully understand their implications.

To them, scanning their face to try on glasses might just be a cool feature, but few have a real understanding of what that means.

So, they might just try it once and then have their biometric information forever captured and sold on.

There might be mechanisms for them to get it back or have it deleted, but the average user is rarely aware a) that the information was captured, stored and sold on, and b) that they can do something about it.

Pessimistically, 30 years from now this information could crop up somewhere and be used to suggest the glasses they tried on today, or worse.

At the risk of sounding hyperbolic, this is similar to the Cambridge Analytica situation.

Tech-savvy people didn’t fill in quizzes asking for detailed personal information, and they will not scan their faces.

But regular users did fill out quizzes and will scan their faces. And that makes all of us worse off.

Incomplete information provided to users

Users are given incomplete information about the plans to build a machine learning model and to use a repository of their data for future designs.

Furthermore, users don’t seem to be given any way to opt out of either of these.

This should not be acceptable. It’s imperative to be entirely upfront about what the data is being used for and give the users as much choice as possible.

What to do about it?

Ensuring everyone’s privacy requires a two-pronged approach.

The first is government regulation of what is and isn’t acceptable. To a degree, that’s already in place, or at least in progress. One piece that is still missing, however, is regulation of personal data used for machine learning. This should be part of the next wave of regulation, under which users would have to explicitly opt in to having their data ingested.

The second is educating users. And nowhere near enough effort is being put into that.

The good news is that education can be done by everyone. The main thing to do is to point out privacy issues to less tech-savvy people around you.

Try to explain just how easily data is captured, used (and abused) and moved between companies.

For biometric data in particular, stress just how sensitive it is and that it should not be given away for anything but the most critical of uses.

But I do think that there should be broader, even government backed, initiatives to inform users.

What Cubitts should do

I’d strongly recommend that they restructure their app to store the biometric information only locally.

They’d still be able to provide the same service by downloading the frame dimensions, instead of uploading data.

The biometric information would also stay on the device for as long as the user doesn’t delete the app’s data, so they could still recommend frames, but again locally.

To build the ML model they’re working on, they should first make it opt-in and clearly describe the privacy implications. Second, they should use the data only for the model, and once it’s ingested, it shouldn’t be stored individually.
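As a rough illustration of “opt in, ingest, then discard”, here is a Python sketch of a hypothetical nearest-centroid style model. It is not what Cubitts is building (the article doesn’t say what kind of model it is); it just shows a model that skips anyone who hasn’t opted in, keeps only a running average of measurements per chosen style, and never stores individual records.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Vector = Tuple[float, ...]


@dataclass
class Centroid:
    count: int = 0
    mean: Vector = ()

    def update(self, x: Vector) -> None:
        # Incremental mean: mean += (x - mean) / n, so samples are never kept.
        self.count += 1
        if self.count == 1:
            self.mean = tuple(x)
        else:
            self.mean = tuple(m + (xi - m) / self.count
                              for m, xi in zip(self.mean, x))


@dataclass
class StyleModel:
    """Hypothetical nearest-centroid model: which style do similar faces pick?"""
    centroids: Dict[str, Centroid] = field(default_factory=dict)

    def ingest(self, measurements: Vector, chosen_style: str,
               opted_in: bool) -> bool:
        # Users who haven't opted in are skipped entirely.
        if not opted_in:
            return False
        # Only the running mean is updated; the record itself is discarded.
        self.centroids.setdefault(chosen_style, Centroid()).update(measurements)
        return True

    def predict(self, measurements: Vector) -> Optional[str]:
        # Suggest the style whose average face is closest to this one.
        if not self.centroids:
            return None
        return min(self.centroids,
                   key=lambda style: math.dist(self.centroids[style].mean,
                                               measurements))


model = StyleModel()
model.ingest((140.0, 18.0), "round", opted_in=True)
model.ingest((150.0, 20.0), "round", opted_in=True)
model.ingest((130.0, 16.0), "square", opted_in=False)  # ignored
model.ingest((120.0, 22.0), "square", opted_in=True)
print(model.centroids["round"].mean)  # (145.0, 19.0)
print(model.predict((142.0, 19.0)))   # round
```

One caveat: a style chosen by only one or two people has an “average” that is essentially their own measurements, so a real system would want a minimum group size before relying on a centroid.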

They could also still influence future design by collecting anonymous data on whether a frame is found suitable or unsuitable. From the aggregate counts for each frame, they could make well-informed decisions about whether its proportions work and adjust them accordingly.
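A sketch of that aggregate-only approach in Python, assuming a hypothetical `FrameFeedback` store that keeps nothing but per-frame counts of “suitable” and “unsuitable” answers. The 50% threshold and the 20-response minimum are arbitrary placeholders.

```python
from collections import Counter
from typing import List, Optional


class FrameFeedback:
    """Hypothetical aggregate store: per-frame counts only, no user IDs."""

    def __init__(self) -> None:
        self.suitable = Counter()
        self.unsuitable = Counter()

    def record(self, frame: str, is_suitable: bool) -> None:
        # Only the frame name and the yes/no answer are kept.
        (self.suitable if is_suitable else self.unsuitable)[frame] += 1

    def suitability_rate(self, frame: str) -> Optional[float]:
        total = self.suitable[frame] + self.unsuitable[frame]
        return None if total == 0 else self.suitable[frame] / total

    def frames_to_review(self, threshold: float = 0.5,
                         min_responses: int = 20) -> List[str]:
        # Flag frames most people found unsuitable, skipping frames with
        # too few responses to say anything meaningful.
        flagged = []
        for frame in sorted(set(self.suitable) | set(self.unsuitable)):
            total = self.suitable[frame] + self.unsuitable[frame]
            if total >= min_responses and self.suitable[frame] / total < threshold:
                flagged.append(frame)
        return flagged


feedback = FrameFeedback()
for frame, yes, no in [("Frame A", 30, 5), ("Frame B", 8, 22), ("Frame C", 0, 3)]:
    for _ in range(yes):
        feedback.record(frame, True)
    for _ in range(no):
        feedback.record(frame, False)
print(feedback.frames_to_review())  # ['Frame B']
```

“Frame C” isn’t flagged despite a 0% rate, because three answers aren’t enough to justify redesigning a frame.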

Potential for an API

Perhaps a biometric repository startup might be a good idea.

It would be a service that meets the highest security standards, like a bank or a hospital.

Through that service you could selectively, and temporarily, give access to certain parts of your biometric data.

That way, instead of having you scan your entire face, they could request just the data they need and you could approve only specific measurements.
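Here’s a minimal Python sketch of what selective, temporary access could look like. `BiometricVault`, its methods and the measurement names are all hypothetical, and it runs in memory with no encryption or authentication, so it only illustrates the consent model (specific fields, one requester, limited time, revocable), not the bank-grade security such a service would need.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Dict, FrozenSet, Optional, Set


@dataclass(frozen=True)
class Grant:
    requester: str
    fields: FrozenSet[str]
    expires_at: float


class BiometricVault:
    """Hypothetical user-controlled store that releases only approved fields."""

    def __init__(self, measurements: Dict[str, float]) -> None:
        self._measurements = dict(measurements)
        self._grants = {}

    def approve(self, requester: str, fields: Set[str],
                ttl_seconds: float) -> str:
        # The user approves specific measurements for one requester, for a
        # limited time, and gets back an opaque token to hand over.
        unknown = set(fields) - set(self._measurements)
        if unknown:
            raise KeyError(f"unknown measurements: {sorted(unknown)}")
        token = secrets.token_urlsafe(16)
        self._grants[token] = Grant(requester, frozenset(fields),
                                    time.time() + ttl_seconds)
        return token

    def fetch(self, token: str, requester: str,
              now: Optional[float] = None) -> Dict[str, float]:
        # Only the approved fields are returned, and only while the grant is valid.
        now = time.time() if now is None else now
        grant = self._grants.get(token)
        if grant is None or grant.requester != requester or now >= grant.expires_at:
            raise PermissionError("no valid grant for this requester")
        return {name: self._measurements[name] for name in grant.fields}

    def revoke(self, token: str) -> None:
        # The user can withdraw access at any time.
        self._grants.pop(token, None)


vault = BiometricVault({"pupillary_distance_mm": 63.0,
                        "nose_bridge_mm": 18.5,
                        "face_width_mm": 138.0})
token = vault.approve("glasses-shop", {"pupillary_distance_mm", "nose_bridge_mm"},
                      ttl_seconds=600)
print(vault.fetch(token, "glasses-shop"))  # only the two approved measurements
```

The retailer never sees `face_width_mm`, and once the ten minutes are up (or the user revokes the token) it can’t fetch anything at all.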

e: Potential for loss

Loren, via email, made a very good point that another issue with scanning your face is the potential for that data to be lost or stolen.